How Much Monthly Traffic Do You Need to Start A/B Testing?

If you're wondering whether your site has enough traffic to run A/B tests, you're not alone. It's one of the most common questions we get. And here's the short answer:

The more traffic you have, the easier it is to run reliable A/B tests. But even if your traffic is low, that doesn’t mean you can’t test; you just need to test differently.

What’s the Point of A/B Testing?

A/B testing is all about making better decisions. You show version A to half of your visitors, version B to the other half, and see which one performs better, usually measured by conversion rate.

Sounds simple? It is. But to trust the results, your test needs to be statistically significant. That’s a fancy way of saying: you need enough traffic for the results to be real, not just a lucky streak.
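If you’re curious what “statistically significant” means under the hood, here’s a minimal Python sketch of one standard frequentist check, a two-proportion z-test. The function name and visitor counts are illustrative assumptions, and real testing tools may use different methods or corrections.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) comparing B's conversion rate with A's."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate, assuming A and B really convert the same (the null hypothesis)
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)), written via the complementary error function
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 2.0% vs 2.2% conversion at two traffic levels
print(two_proportion_z_test(100, 5_000, 110, 5_000))
print(two_proportion_z_test(1_600, 80_000, 1_760, 80_000))
```

With the same 2.0% vs 2.2% conversion rates, 5,000 visitors per version gives a p-value of roughly 0.49 (nowhere near the usual 0.05 cutoff), while 80,000 per version brings it down to roughly 0.005. Same lift, very different confidence.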

What Affects How Much Traffic You Need?

There are 3 main things that decide how much traffic your test needs:

- Your current conversion rate

A lower conversion rate usually needs more traffic to detect changes.

- The size of the change you’re expecting

Big changes are easier to detect (and need fewer visitors). Small changes take more traffic.

- The confidence level you want

Most people use 95% confidence, meaning that if there were no real difference between the versions, you’d see a result this strong by chance no more than 5% of the time. You’ll also hear about “power” (usually 80%), which is the chance your test detects a real improvement of the size you’re aiming for. Don’t worry, most tools handle this for you.
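For the curious, one common back-of-the-envelope formula shows how these three factors combine into the number of visitors needed per variation, n. It’s a normal approximation, and calculators vary in the details (for example, one-sided vs two-sided tests):

```latex
n \approx \frac{\left(z_{1-\alpha/2} + z_{1-\beta}\right)^{2}\,\left[p_1(1-p_1) + p_2(1-p_2)\right]}{\left(p_2 - p_1\right)^{2}}
```

Here p_1 is your current conversion rate, p_2 is the rate you hope to reach, α = 0.05 for 95% confidence (z ≈ 1.96), and 1 − β = 0.80 for 80% power (z ≈ 0.84). Because the expected change (p_2 − p_1) is squared in the denominator, halving the change you want to detect roughly quadruples the traffic you need.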

A Quick Example

Let’s say your current conversion rate is 2%. You want to test a new headline and hope to see a 10% relative uplift in conversion (2% → 2.2%).

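Using a sample size calculator (like AB Test Guide) at 95% confidence and 80% power, you’ll need roughly 60,000 to 80,000 visitors PER VARIATION to detect that kind of uplift confidently. The lower figure assumes a one-sided test, the higher a two-sided one.

That’s 120,000 to 160,000 total visitors for just one test.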
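If you want to sanity-check a calculator’s output, here’s a minimal Python sketch of the standard normal-approximation sample size formula. The function name and its defaults (95% confidence, 80% power) are assumptions for illustration, and individual calculators may use slightly different formulas.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline: float, relative_uplift: float,
                              confidence: float = 0.95, power: float = 0.80,
                              two_sided: bool = True) -> int:
    """Approximate visitors needed in EACH variation (normal approximation)."""
    p1 = baseline                          # current conversion rate
    p2 = baseline * (1 + relative_uplift)  # conversion rate you hope to reach
    alpha = 1 - confidence
    # Critical value for the significance threshold (split across both tails if two-sided)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2 if two_sided else 1 - alpha)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


print(sample_size_per_variation(0.02, 0.10))                   # two-sided test
print(sample_size_per_variation(0.02, 0.10, two_sided=False))  # one-sided test
```

Under these assumptions, the two-sided version lands a little above 80,000 visitors per variation and the one-sided version around 63,500. Which of the two a calculator defaults to explains much of the spread you’ll see in quoted figures for scenarios like this one.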

So, How Much Monthly Traffic Do You Actually Need?

Here’s a simple breakdown:

🟥 Less than 10,000 monthly visitors → Too small for reliable A/B testing

You’ll need a huge change (like a 30%+ improvement) for the test to detect anything. It’s not impossible, but very hit-or-miss. You're better off testing bold changes or improving other parts of your funnel first.

🟧 10,000 to 100,000 visitors/month → Challenging, but possible

You can run tests, but aim for big changes, not micro-optimizations. Don’t waste time tweaking button colors; test different page layouts, messaging angles, or entire landing pages. Give your test a fighting chance.

🟨 100,000 to 1,000,000 visitors/month → Ideal range

You can start running iterative tests, like refining headlines, CTAs, or checkout flows. You’ll be able to detect smaller changes (more so toward the top of this range) and run multiple tests a month.

🟩 1M+ monthly visitors → A/B testing playground

You can run lots of tests at once. Great for testing smaller tweaks, segmenting audiences, and increasing your experimentation velocity.
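To see where these tiers come from, here’s a rough Python sketch that flips the question around: given your monthly traffic, what’s the smallest relative uplift a test could reliably detect? It assumes (all illustrative, so adjust to your site) a 2% baseline conversion rate, a 4-week test, a 50/50 split with all traffic in the test, 95% confidence, 80% power, and a two-sided test.

```python
import math
from statistics import NormalDist

WEEKS_PER_MONTH = 52 / 12  # about 4.33


def min_detectable_uplift(monthly_visitors: int, baseline: float = 0.02,
                          test_weeks: float = 4, confidence: float = 0.95,
                          power: float = 0.80) -> float:
    """Approximate smallest relative uplift a two-sided 50/50 test can detect."""
    # Assumption: every visitor during the test window enters the experiment
    visitors_in_test = monthly_visitors * test_weeks / WEEKS_PER_MONTH
    n_per_variation = visitors_in_test / 2
    z_total = NormalDist().inv_cdf(1 - (1 - confidence) / 2) + NormalDist().inv_cdf(power)
    # Simplification: use the baseline rate's variance for both variations
    absolute_change = z_total * math.sqrt(2 * baseline * (1 - baseline) / n_per_variation)
    return absolute_change / baseline


for monthly in (10_000, 100_000, 1_000_000):
    print(f"{monthly:>9,} visitors/month -> ~{min_detectable_uplift(monthly):.0%} detectable uplift")
```

With those assumptions, it comes out at roughly 41% for 10,000 visitors a month, about 13% for 100,000, and about 4% for 1,000,000, which lines up with the tiers above. A higher baseline conversion rate or a longer test shrinks these numbers.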

What If You Don’t Have Enough Traffic?

Low traffic? No problem, kind of.

If you're below 10k visitors/month, A/B testing should not be your primary optimization tool. Here's what to do instead:

1. Run bold “innovative” tests

You won’t have the numbers for small changes. But if you test a totally new page design, pricing structure, or product messaging, even a small amount of traffic can show a clear trend.

2. Use directional signals

Even if you don’t reach full statistical confidence, you can still learn what might work. Use tools like heatmaps, session recordings, surveys, and user tests to validate ideas before you commit.

3. Optimize for micro-conversions, but measure the right outcome

Don’t just track button clicks. Make sure you’re measuring the end goal (sales, signups, revenue), not just the steps before it.

And if you still want to test? Run bigger changes. Don’t test button colors; test entirely different offers, layouts, or funnel steps.

Bayesian Thinking: Better for Real-World Decisions

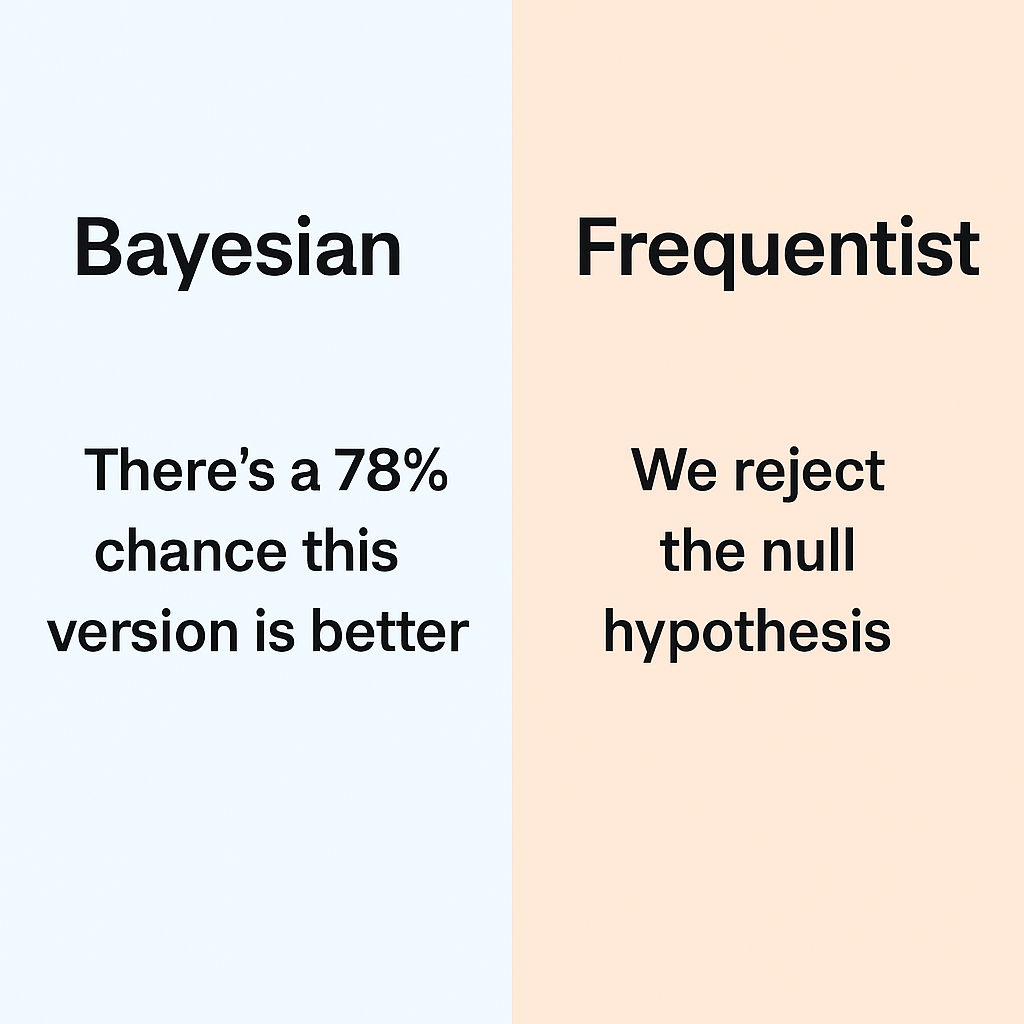

While many A/B testing tools today support both Frequentist and Bayesian approaches, it’s important to understand the difference. Frequentist statistics give you a binary “yes or no” result: was the lift statistically significant or not? That’s fine if you have high traffic and a stable environment, but it’s rigid and often impractical for smaller sites or fast-moving teams.

Bayesian testing, on the other hand, is more flexible and realistic. It gives you probabilities instead of black-and-white answers. For example, instead of saying “B wins,” it might say “There’s a 78% chance B performs better than A.” That’s more helpful when you're making business decisions based on imperfect information.
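Here’s a minimal Python sketch of how a number like “78% chance B performs better” can be computed: model each version’s conversion rate with a Beta distribution and count how often B’s sampled rate beats A’s. The flat Beta(1, 1) prior, the function name, and the example counts are assumptions for illustration, not necessarily how Mida or any other tool computes it.

```python
import random


def prob_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int,
                   draws: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(conversion rate of B > conversion rate of A)."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    wins = 0
    for _ in range(draws):
        # Posterior with a flat Beta(1, 1) prior: Beta(1 + conversions, 1 + non-conversions)
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


print(f"{prob_b_beats_a(100, 5_000, 110, 5_000):.0%} chance B beats A")
```

For 100 vs 110 conversions out of 5,000 visitors each (2.0% vs 2.2%), this lands at roughly 75%: not a sure thing, but a usable input for a business decision, even though a significance test on the same data would call it inconclusive.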

At Mida, we lean toward Bayesian interpretation. It aligns better with how businesses operate, in shades of confidence and trade-offs, not just p-values.

Look at A/B Testing as a Signal

This is key: don’t let stats paralyze you.

A/B testing is just one tool. It tells you if there’s likely an improvement. But business context matters more:

- Will implementing the change slow down your dev team?

- Does it align with your brand or long-term strategy?

- Even if the data is inconclusive, is it directionally promising?

Sometimes, you move forward with a variation even without a clean statistical win, because it's better for the user experience, cheaper to maintain, or aligns with your roadmap.

Final Thoughts

You don’t need millions of visitors to get value from A/B testing. But you do need to be smart about when and how you use it.

If your traffic is low:

- Focus on bold, meaningful changes.

- Prioritize UX and qualitative research.

- Consider Bayesian stats for more flexible decision-making.

A/B testing isn’t a magic bullet. It’s a compass. Let it guide you, but don’t let it stop you from making smart business calls.
