Why A/B Testing Is the Missing Infrastructure Layer for LLM Products

AI companies today are building sophisticated products on top of Large Language Models (LLMs) and complex agentic workflows. The demos look impressive. The pitch decks look revolutionary. And the prototypes feel magical.

But underneath the surface, your AI stack is far more fragile than anyone likes to admit.

In this post, we’ll break down exactly how modern, multi-step AI workflows actually operate, illustrate why this chain-based design leads to compounding risk and silent failure (what we call drift), and explain why A/B testing is rapidly becoming the new critical infrastructure layer for any AI product that demands reliability, scalability, and enterprise trust.

1. LLMs Don’t “Execute Logic”: They Predict Text

To understand AI fragility, you must first reset your mental model of the core engine.

Unlike traditional software, which is deterministic (e.g., 2+2 always equals 4), LLMs are probabilistic. They do not execute explicit instructions the way a program does; instead, they generate output one token at a time, each time picking a likely next token given the input and the patterns learned from their massive training data.

This fundamental difference means the guarantees you rely on in traditional software development vanish:

- There is no “always works” guarantee.

- The same prompt can produce different outcomes across time or context.

- Small changes in context can lead to major, unpredictable changes in behavior.

- Model providers frequently update their underlying models, causing unpredictable output shifts without you changing a single line of code.
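To make the "same prompt, different outcome" point concrete, here is a minimal sketch of temperature sampling over a toy next-token distribution. The tokens and logit values are invented for illustration and do not come from any real model; production LLMs do the same kind of draw over vocabularies of many thousands of tokens.

```python
import math
import random

# Hypothetical logits for the token that follows "2+2=". Invented numbers.
TOY_LOGITS = {"4": 5.0, "four": 3.5, "5": 1.0}

def sample_next_token(logits, temperature=1.0, rng=random):
    """Draw one token from a softmax over the given logits."""
    tokens = list(logits)
    scaled = [logits[t] / temperature for t in tokens]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(tokens, weights=weights, k=1)[0]

def sample_many(n, seed):
    """Repeat the same 'prompt' n times with a seeded generator."""
    rng = random.Random(seed)
    return [sample_next_token(TOY_LOGITS, rng=rng) for _ in range(n)]

if __name__ == "__main__":
    draws = sample_many(1000, seed=0)
    for token in TOY_LOGITS:
        print(token, draws.count(token))
```

Even in this toy setup the most likely token wins most of the time but not every time, which is the behavior the list above describes.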

Every AI company knows this, whether they say it out loud or not. But this base-level instability is only the beginning of the problem.

2. Agentic Workflows: Compounding Risk by Design

Most sophisticated AI products today don’t rely on a single, one-and-done model call. They rely on a chained sequence of multiple agents, often called an agentic workflow. Each agent performs a specialized, critical step, turning a complex user request into a final output.

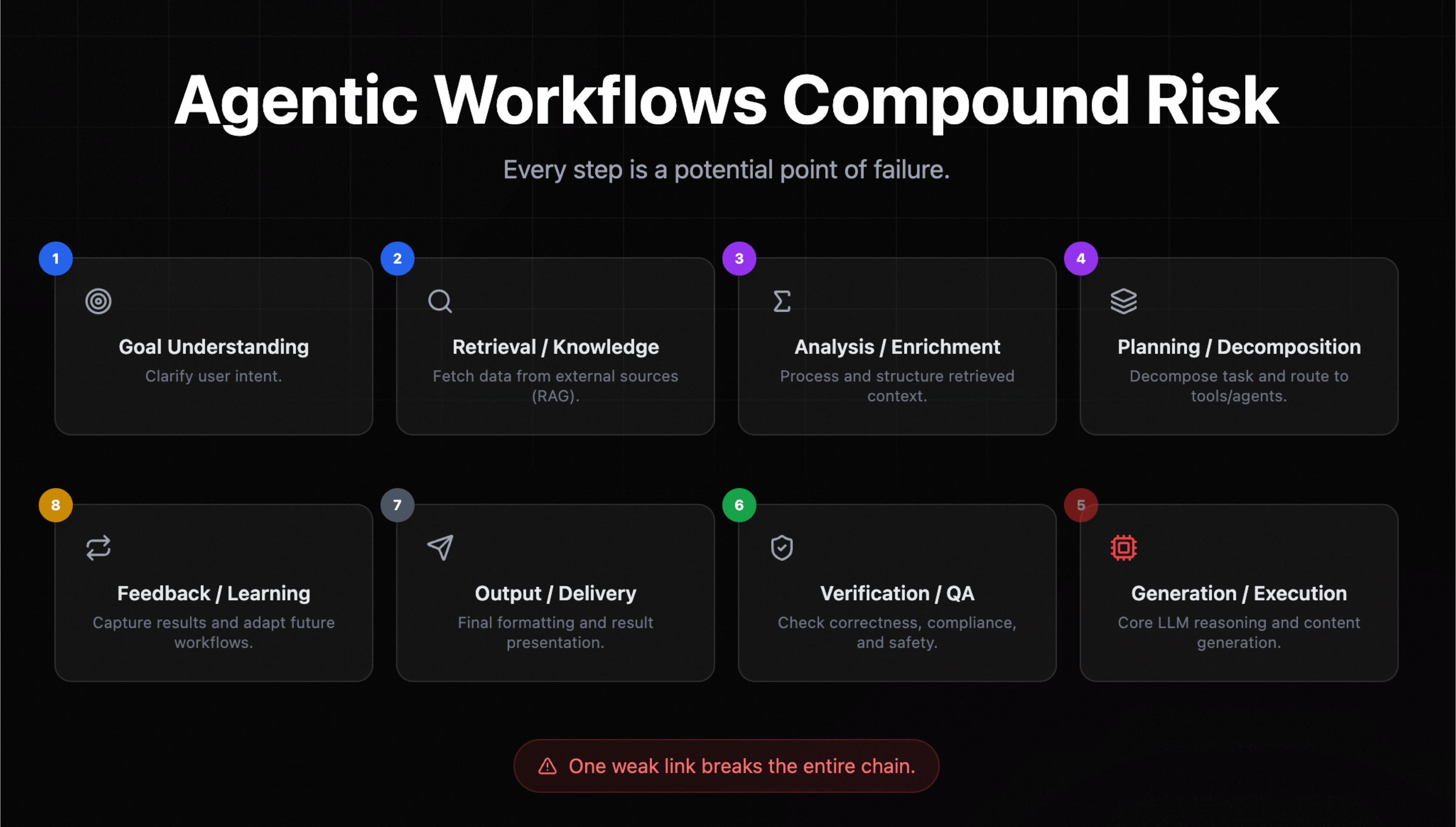

The diagram you need to internalize looks something like this:

As the visual clearly indicates, this approach is context engineering at scale, and every additional step introduces another potential point of failure.

A typical multi-agent workflow runs sequentially, with the output of each step becoming the input to the next:

- Goal Understanding: Clarify user intent.

- Retrieval / Knowledge (RAG): Fetch data from external sources.

- Analysis / Enrichment: Process and structure the retrieved context.

- Planning / Decomposition: Decompose the task and route to tools/agents.

- Generation / Execution: Core LLM reasoning and content generation.

- Verification / QA: Check correctness, compliance, and safety.

- Output / Delivery: Final formatting and result presentation.

- Feedback / Learning: Capture results and adapt future workflows.

In this architecture, one tiny drift anywhere can lead to catastrophic failure at the end. An error in step 2 (retrieval) might be compounded by step 3 (analysis), leading to a completely wrong result in step 5 (generation) that is missed by step 6 (verification).
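The compounding effect is easy to quantify with a back-of-the-envelope calculation. The sketch below assumes each of the eight steps succeeds independently with the same probability and that no later step repairs an earlier mistake; both are simplifying assumptions, and the 98% figure is illustrative rather than measured.

```python
import math

def chain_success_rate(per_step_rates):
    """Probability that every step succeeds, assuming independent steps."""
    return math.prod(per_step_rates)

if __name__ == "__main__":
    # Eight steps, each assumed to be right 98% of the time.
    eight_steps = [0.98] * 8
    print(round(chain_success_rate(eight_steps), 3))  # 0.851
```

Under these assumptions, a chain of individually "98% reliable" steps delivers a fully correct result only about 85% of the time.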

3. What Is “Drift” and Why It’s Your Silent Product Killer

Drift is when your AI system subtly changes its behavior over time, even if you, the developer, haven't manually changed its prompts or code.

Drift is not a crash; it is not a clear error. Drift is a slow, silent shift in behavior that destroys product reliability.

There are several critical types of drift that occur across the agentic workflow:

A. Model Drift

- The Cause: The underlying model (e.g., from OpenAI, Anthropic, etc.) is updated by the provider.

- The Effect: Your prompts stay the same, but the model's new weights cause your outputs to suddenly shift in tone, length, or compliance.

B. Prompt Drift

- The Cause: You or a teammate slightly tweaks a system prompt to fix a specific bug.

- The Effect: The change unexpectedly alters the model’s behavior everywhere else, introducing new, harder-to-spot regressions.

C. Context Drift

- The Cause: Live data (from retrieval, user input, or agent-generated context) shifts subtly in its structure or relevance.

- The Effect: The AI begins failing in unexpected ways because the input data it relies on is no longer what the prompts were optimized for.

D. Agent Drift

- The Cause: One agent in your chain changes its behavior (due to any of the above reasons), breaks an assumption, or misinterprets the input it receives from the preceding agent.

- The Effect: The entire chain of execution fails downstream, leading to broken JSON schemas, skipped steps, or irrelevant final outputs.

The danger of drift is that you rarely notice it in isolation. You only notice it when your product metrics dip, support tickets spike, or, worst of all, when your customers complain about "broken chains" or "higher hallucination rates."
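One way to surface agent drift closer to its source is to check each handoff between agents against the shape the next agent expects. The following is a minimal sketch using only the standard library; the field names in `REQUIRED_FIELDS` are a hypothetical contract between a retrieval step and an analysis step, not something defined in this post.

```python
import json

# Hypothetical contract for the retrieval -> analysis handoff.
REQUIRED_FIELDS = {"query": str, "documents": list}

def check_handoff(raw_output):
    """Return a list of contract violations for one agent's raw output."""
    try:
        payload = json.loads(raw_output)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(payload, dict):
        return ["top-level value is not an object"]
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            problems.append(f"wrong type for {field}")
    return problems

if __name__ == "__main__":
    print(check_handoff('{"query": "refund policy", "documents": []}'))
    print(check_handoff('{"query": "refund policy"}'))
```

Counting these violations per experiment variant gives you a metric you can compare across variants, rather than waiting for the failure to show up in support tickets.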

4. Why A/B Testing Is Now Critical Infrastructure for AI Products

Traditional software development relies on guarantees: unit tests, static typing, and clear failure modes. AI systems weaken all of these, because a check that passes today can fail tomorrow on the very same input.

What AI systems need instead is live, real-world evaluation at scale, a function only A/B testing can reliably provide.

The A/B Testing Mandate

A robust A/B testing layer becomes the runtime validation framework for your entire agentic stack. It lets you compare variants of a model, prompt, or agent on live traffic before you roll a change out to everyone.

Use Case: Safely Test Cheaper Models (Cost Savings)

This is one of the most powerful and immediate use cases. You want to swap from a costly model (e.g., Claude Sonnet 4.5) to a smaller, faster model. Without A/B testing, this is a terrifying leap of faith.

With A/B testing, you can route 10% of your production traffic to the new, cheaper model. You then compare key metrics: hallucination rate, completion rate, customer satisfaction, or a proprietary quality score.
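A common way to implement this kind of split is deterministic hash-based bucketing, so that a given user always lands in the same variant. The sketch below is one possible approach, not a description of any particular platform; the function name, the experiment key, and the `user-…` identifiers are all hypothetical.

```python
import hashlib

def assign_variant(user_id, experiment, treatment_share=0.10):
    """Map a user to 'treatment' or 'control', stable across requests."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Turn the first 8 bytes of the hash into a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "treatment" if bucket < treatment_share else "control"

if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    treated = sum(assign_variant(u, "cheaper-model") == "treatment" for u in users)
    print(f"share routed to the cheaper model: {treated / len(users):.3f}")
```

Including the experiment name in the hash key keeps assignments independent across experiments, so the same users are not always the ones receiving the new variant.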

The best outcome often isn't a higher score; it's “no meaningful difference.” If quality stays within a tolerance you set in advance, you gain a massive cost saving backed by measured evidence rather than a guess.
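Showing that two variants perform the same is usually framed as a non-inferiority test: you choose the largest quality drop you are willing to accept, then check whether the data rules out a drop that large. The sketch below uses a one-sided two-proportion z-test with a normal approximation. The counts and the 3-point margin are invented for illustration, and picking the margin is a product decision rather than a statistical one.

```python
import math

def non_inferiority_test(success_c, n_c, success_t, n_t, margin):
    """One-sided z-test of H0: p_t - p_c <= -margin (treatment is worse).

    Returns (z, p_value). A small p_value supports non-inferiority.
    """
    p_c = success_c / n_c
    p_t = success_t / n_t
    se = math.sqrt(p_c * (1 - p_c) / n_c + p_t * (1 - p_t) / n_t)
    z = (p_t - p_c + margin) / se
    p_value = 0.5 * math.erfc(z / math.sqrt(2))  # upper tail of N(0, 1)
    return z, p_value

if __name__ == "__main__":
    # Hypothetical completion counts: 90% of traffic on the current model,
    # 10% on the cheaper one, with a tolerated drop of 3 percentage points.
    z, p = non_inferiority_test(8100, 9000, 895, 1000, margin=0.03)
    print(f"z = {z:.2f}, one-sided p = {p:.4f}")
```

With a small treatment group the standard error is dominated by that group, so a 10% slice may need to run for a while before the test can rule out the margin.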

5. The Core Message: AI Needs Stability, Not Hope

You can’t rely on intuition. You can’t rely on a successful demo. You can’t rely on "it worked yesterday."

In AI, the most valuable outcome isn’t a marginal improvement; it’s certainty.

Certainty that:

- Your workflow hasn’t silently drifted.

- Your agents are behaving as intended.

- Your prompt iterations are safe.

- Your model upgrades are non-regressive.

- Your product's output remains stable and predictable.

If CI/CD (Continuous Integration/Continuous Delivery) made software scalable and deployable, A/B testing will be the infrastructure that makes AI reliable and trustworthy.

The companies that succeed in the next generation of AI product development will not be the ones with the flashiest prototypes, but the ones who treat their probabilistic, chained-agent workflows with the rigorous infrastructure they demand. They will be the companies that test relentlessly.

6. The Future: The Companies That Win Will Be the Ones That Test Relentlessly

The AI products that dominate tomorrow will not be the ones that rely on hope and intuition, but the ones built on a foundation of certainty. They will require reliability, predictability, observability, and consistency, qualities that the probabilistic nature of LLMs actively resists.

This requires a new, dedicated layer of infrastructure that moves beyond traditional QA and embraces continuous, live-traffic experimentation.

If CI/CD made software scalable, A/B testing will make AI reliable.

Embrace Certainty with Mida: The A/B Testing Infrastructure Built for the AI Age

The choice is clear: you can wait for your customers to find the drift, or you can preemptively secure your product with a modern A/B testing solution. For AI companies that need to test complex, multi-agent workflows, from subtle prompt tweaks to massive model swaps, platforms like Mida offer the essential infrastructure layer you've been missing.

Mida is built for the pace of AI iteration, giving your product and engineering teams the power to:

- Test Everything: Compare entire agentic chains, single agent logic, specific prompt variations, or retrieval strategies side-by-side in production.

- Prevent Silent Drift: Use live, real-world data to spot regressions or cost increases instantly when swapping models or updating providers.

- Optimize Cost and Latency: Safely test cheaper, faster models (e.g., swapping a high-cost Claude Sonnet 4.5 step for a smaller, specialized model) and quantify that the quality remains stable.

- Accelerate Iteration: Leverage its AI-powered features and lightweight design to launch experiments faster and analyze results with greater statistical confidence than ever before.

AI isn’t magic. AI isn’t predictable. AI isn’t stable by default. But with the right infrastructure, it absolutely can be.

Stop relying on demo-level results and start building enterprise-grade confidence. Mida gives you the measurable certainty you need to scale your LLM product without fear of the inevitable drift.

Ready to transform guesswork into guaranteed product stability? Start your AI experimentation journey with Mida today.
